In recent years, there have been significant advancements in the field of neural networks and Artificial Intelligence (AI). Microsoft, too, has developed a suite of Cognitive Services consisting of machine learning algorithms designed to address challenges in the realm of AI. These Cognitive Services APIs are organized into five distinct categories:

- Vision: analyzes images and videos for content and other useful information.

- Speech: improves speech recognition and identifies the speaker.

- Language: understands sentences and intent rather than just words.

- Knowledge: tracks down research from scientific journals for you.

- Search: applies machine learning to web searches.

In this tutorial, we will use the Face API to detect a face in a photo and estimate attributes such as age, gender, and emotion. A companion GitHub sample for character recognition with the Computer Vision API is linked at the end of this article.

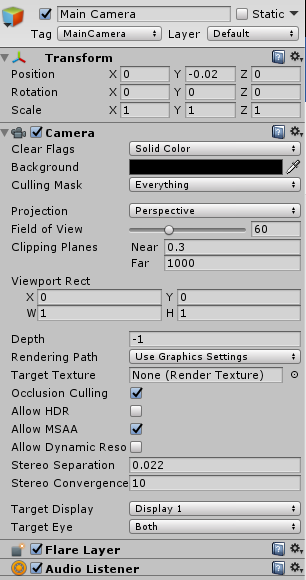

It’s crucial to configure the camera in the scene with a perspective projection and ensure it’s positioned correctly, as depicted in the image. Set the camera’s attributes to: Position (0, -0.02, 0), Rotation (0, 0, 0), and Scale (1, 1, 1). Essentially, the location you select for the camera will serve as the reference point for initializing other objects in the 3D space.

You’ll need two GameObjects. The first, named ‘PhotoCaptureManager’, holds the necessary scripts, captures photos from the HoloLens device, and sends them to Azure Cognitive Services for processing. The second, named ‘AudioSource’, contains the Audio Source components that play back the voice output generated by the text-to-speech implementation, for example a phrase such as ‘He is approximately 26 years old and appears to be happy.’

Before you begin coding, it’s essential to create an Azure account and save the service key for use in your code. You can select the billing package that suits your needs. The F0 free package allows you to make up to 20 calls per minute and up to 30,000 calls per month.
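Because the F0 tier caps requests at 20 per minute, it can help to gate outgoing calls on the client. The following is a minimal sketch of a sliding-window limiter, not part of the sample project: `CallRateLimiter` and `TryAcquire` are hypothetical names, and the quota is passed in rather than hard-coded.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical helper (not in the original project): allows at most
// maxCalls calls within any time window of the given length.
public class CallRateLimiter
{
    private readonly int maxCalls;
    private readonly TimeSpan window;
    private readonly Queue<DateTime> timestamps = new Queue<DateTime>();

    public CallRateLimiter(int maxCalls, TimeSpan window)
    {
        this.maxCalls = maxCalls;
        this.window = window;
    }

    // Returns true and records the call if it fits in the quota at time 'now';
    // returns false if the caller should wait before calling the service.
    public bool TryAcquire(DateTime now)
    {
        // Forget calls that are older than the window.
        while (timestamps.Count > 0 && now - timestamps.Peek() >= window)
        {
            timestamps.Dequeue();
        }

        if (timestamps.Count >= maxCalls)
        {
            return false;
        }

        timestamps.Enqueue(now);
        return true;
    }
}
```

One possible use is to create `new CallRateLimiter(20, TimeSpan.FromMinutes(1))` once and call `TryAcquire(DateTime.UtcNow)` before each request, skipping the upload when it returns false.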

Add the following scripts to the ‘PhotoCaptureManager’ GameObject: ‘KeywordGlobalManager.cs’, ‘JSONObject.cs’, ‘PhotoCaptureManager.cs’ (refer to the ‘Take and Display Photos and Videos on HoloLens’ guide for details), and ‘MCSCognitiveServices.cs’. The ‘MCSCognitiveServices.cs’ script contains the web service integration for the Face API of Azure Cognitive Services. It sends requests to the server, parses the responses, and wraps them in a list of Face models.

Face Model

```csharp
using System.Collections.Generic;

// Plain data class: it is created with `new`, so it should not derive from MonoBehaviour.
public class MCSFaceDto
{
    public List<Face> faces { get; set; }
}

// One detected face with its bounding box and estimated attributes.
public class Face
{
    public string faceId { get; set; }
    public FaceRectangle faceRectangle { get; set; }
    public FaceAttributes faceAttributes { get; set; }
    public EmotionAttributes emotionAttributes { get; set; }
}

// Bounding box of the face, in pixels of the submitted image.
public class FaceRectangle
{
    public int top { get; set; }
    public int left { get; set; }
    public int width { get; set; }
    public int height { get; set; }
}

public class FaceAttributes
{
    public int gender { get; set; } // male = 0, female = 1
    public float age { get; set; }
    public FacialHair facialHair { get; set; }
}

// Per-emotion scores returned by the service.
public class EmotionAttributes
{
    public float anger { get; set; }
    public float contempt { get; set; }
    public float disgust { get; set; }
    public float fear { get; set; }
    public float happiness { get; set; }
    public float neutral { get; set; }
    public float sadness { get; set; }
    public float surprise { get; set; }
}

public class HeadPose
{
    public float pitch { get; set; }
    public float roll { get; set; }
    public float yaw { get; set; }
}

public class FacialHair
{
    public bool hasMoustache { get; set; }
    public bool hasBeard { get; set; }
    public bool hasSideburns { get; set; }
}
```

MCSCognitiveServices.cs

```csharp
using System.Collections.Generic;
using System.Globalization;
using UnityEngine;

public class MCSCognitiveServices : MonoBehaviour
{
    // MCS Face API: posts the captured image and parses the detected faces.
    public IEnumerator<object> PostToFace(byte[] imageData, string type)
    {
        bool returnFaceId = true;
        string[] faceAttributes = new string[] { "age", "gender", "emotion" };

        var url = string.Format("https://westus.api.cognitive.microsoft.com/face/v1.0/{0}?returnFaceId={1}&returnFaceAttributes={2}", type, returnFaceId, Converters.ConvertStringArrayToString(faceAttributes));

        var headers = new Dictionary<string, string>()
        {
            { "Ocp-Apim-Subscription-Key", Constants.MCS_FACEKEY },
            { "Content-Type", "application/octet-stream" }
        };

        WWW www = new WWW(url, imageData, headers);
        yield return www;

        // Stop if the request reported an error instead of parsing the response body.
        if (!string.IsNullOrEmpty(www.error))
        {
            Debug.LogError("Face API request failed: " + www.error);
            yield break;
        }

        JSONObject j = new JSONObject(www.text);
        if (j.list != null)
            SaveJsonToModel(j);
    }

    // Converts the JSON array of faces into the Face model and hands it to text-to-speech.
    private void SaveJsonToModel(JSONObject j)
    {
        MCSFaceDto faceDto = new MCSFaceDto();
        List<Face> faces = new List<Face>();

        foreach (var faceItem in j.list)
        {
            // Strip the JSON quotes from the string value, as the gender parsing below does.
            Face face = new Face() { faceId = faceItem.GetField("faceId").ToString().Replace("\"", "") };

            var faceRectangle = faceItem.GetField("faceRectangle");
            face.faceRectangle = new FaceRectangle()
            {
                left = int.Parse(faceRectangle.GetField("left").ToString()),
                top = int.Parse(faceRectangle.GetField("top").ToString()),
                width = int.Parse(faceRectangle.GetField("width").ToString()),
                height = int.Parse(faceRectangle.GetField("height").ToString())
            };

            var faceAttributes = faceItem.GetField("faceAttributes");
            face.faceAttributes = new FaceAttributes()
            {
                // Keep only the whole years of the estimated age.
                age = int.Parse(faceAttributes.GetField("age").ToString().Split('.')[0]),
                gender = faceAttributes.GetField("gender").ToString().Replace("\"", "") == "male" ? 0 : 1
            };

            var emotion = faceAttributes.GetField("emotion");
            face.emotionAttributes = new EmotionAttributes()
            {
                anger = ParseScore(emotion, "anger"),
                contempt = ParseScore(emotion, "contempt"),
                disgust = ParseScore(emotion, "disgust"),
                fear = ParseScore(emotion, "fear"),
                happiness = ParseScore(emotion, "happiness"),
                neutral = ParseScore(emotion, "neutral"),
                sadness = ParseScore(emotion, "sadness"),
                surprise = ParseScore(emotion, "surprise")
            };

            faces.Add(face);
        }

        faceDto.faces = faces;
        PlayVoiceMessage.Instance.PlayTextToSpeechMessage(faceDto);
    }

    // Parses a numeric field with the invariant culture so that "0.95" is read
    // the same way regardless of the device's regional settings.
    private static float ParseScore(JSONObject obj, string field)
    {
        return float.Parse(obj.GetField(field).ToString(), CultureInfo.InvariantCulture);
    }
}

public class Constants
{
    // Replace with the Face API key from your Azure account.
    public static string MCS_FACEKEY = "---------Face-Key---------";
}
```
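The request URL above depends on `Converters.ConvertStringArrayToString`, which is not listed in this article. As a rough sketch, assuming that helper simply joins the attribute names with commas, the URL could be assembled as follows. `FaceUrlSketch`, `JoinAttributes`, and `BuildUrl` are hypothetical names, and passing `"detect"` as the `type` value is an assumption based on the Face API detect endpoint.

```csharp
using System;

public static class FaceUrlSketch
{
    // Assumption: stands in for Converters.ConvertStringArrayToString,
    // which is presumed to produce a comma-separated list.
    public static string JoinAttributes(string[] attributes)
    {
        return string.Join(",", attributes);
    }

    // Builds the Face API request URL for a region and operation type.
    public static string BuildUrl(string region, string type, bool returnFaceId, string[] attributes)
    {
        return string.Format(
            "https://{0}.api.cognitive.microsoft.com/face/v1.0/{1}?returnFaceId={2}&returnFaceAttributes={3}",
            region,
            type,
            returnFaceId ? "true" : "false",
            JoinAttributes(attributes));
    }
}
```

Calling `FaceUrlSketch.BuildUrl("westus", "detect", true, new[] { "age", "gender", "emotion" })` yields `https://westus.api.cognitive.microsoft.com/face/v1.0/detect?returnFaceId=true&returnFaceAttributes=age,gender,emotion`.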

Additionally, a portion of these functionalities is implemented in the ‘PlayVoiceMessage.cs’ script, which is responsible for text-to-speech playback through the user’s speaker.

```csharp
using System.Collections.Generic;
using System.Linq;
using UnityEngine;

public class PlayVoiceMessage : MonoBehaviour
{
    public static PlayVoiceMessage Instance { get; private set; }

    public GameObject photoCaptureManagerGmObj;

    void Awake()
    {
        Instance = this;
    }

    public void PlayTextToSpeechMessage(MCSFaceDto face)
    {
        string message = string.Empty;
        string emotionName = string.Empty;

        if (face.faces.Count > 0)
        {
            EmotionAttributes emotionAttributes = face.faces[0].emotionAttributes;

            // Candidate emotions for the spoken description (neutral is left out).
            Dictionary<string, float> emotions = new Dictionary<string, float>
            {
                { "anger", emotionAttributes.anger },
                { "contempt", emotionAttributes.contempt },
                { "disgust", emotionAttributes.disgust },
                { "fear", emotionAttributes.fear },
                { "happiness", emotionAttributes.happiness },
                { "sadness", emotionAttributes.sadness },
                { "surprise", emotionAttributes.surprise }
            };

            // Pick the emotion with the highest score, not the alphabetically last name.
            emotionName = emotions.OrderByDescending(e => e.Value).First().Key;

            message = string.Format("{0} is pretty much {1} years old and looks {2}", face.faces[0].faceAttributes.gender == 0 ? "He" : "She", face.faces[0].faceAttributes.age, emotionName);
        }
        else
        {
            message = "I couldn't detect anyone.";
        }

        // Try and get a TTS Manager
        TextToSpeechManager tts = null;
        if (photoCaptureManagerGmObj != null)
        {
            tts = photoCaptureManagerGmObj.GetComponent<TextToSpeechManager>();
        }

        if (tts != null)
        {
            // Play voice message
            tts.SpeakText(message);
        }
    }
}
```

Run the application and issue the voice command ‘How does this person look’. Within a few seconds, Cognitive Services returns a description, which is relayed to you through text-to-speech.
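Choosing which emotion to speak means comparing the scores rather than the emotion names. A minimal standalone sketch of that selection is shown below; `EmotionSketch` and `Dominant` are hypothetical names used only for illustration.

```csharp
using System.Collections.Generic;
using System.Linq;

public static class EmotionSketch
{
    // Returns the name with the highest score, or null when there are no scores.
    public static string Dominant(IDictionary<string, float> scores)
    {
        if (scores == null || scores.Count == 0)
        {
            return null;
        }

        return scores.OrderByDescending(pair => pair.Value).First().Key;
    }
}
```

For the scores anger 0.01, happiness 0.90, and surprise 0.05, `Dominant` returns "happiness", whereas taking the maximum of the keys alone would return "surprise" because that comparison is alphabetical.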

You can access the samples and download them from the following GitHub links: https://github.com/gntakakis/Hololens-MSCognitiveServicesFace-Unity and https://github.com/gntakakis/Hololens-MSCognitiveServicesOCR-Unity